Reverse Engineering the ReadyMode API for QA Automation: A 2-Day Sprint to 6x Faster Lead Delivery


By James Le

The Problem We Solved

A lead-generation agency serving real estate investors came to us with a simple but costly problem. Their human QA process was killing their velocity. Every qualified call required manual review before client delivery, creating a 2-hour bottleneck that only got worse as they scaled.

We delivered a working solution in 48 hours that reduced lead delivery time from ~120 minutes to ~20 minutes.

Impact at a glance:

- Before: 120 minutes from qualified lead to client delivery

- After: 20 minutes (6x improvement)

- Time to build: 2 days

- Manual hours saved: 15+ hours/week

Part 1: Breaking Into ReadyMode Without an API

Goal

Create automated access to ReadyMode's call data without waiting weeks for official API access. We needed to extract call metadata and recordings, and to build a foundation for automated QA.

Tech Stack

- SeleniumBase CDP mode for stable browser automation

- Python httpx for authenticated HTTP requests

- Modal for serverless execution

How We Did It

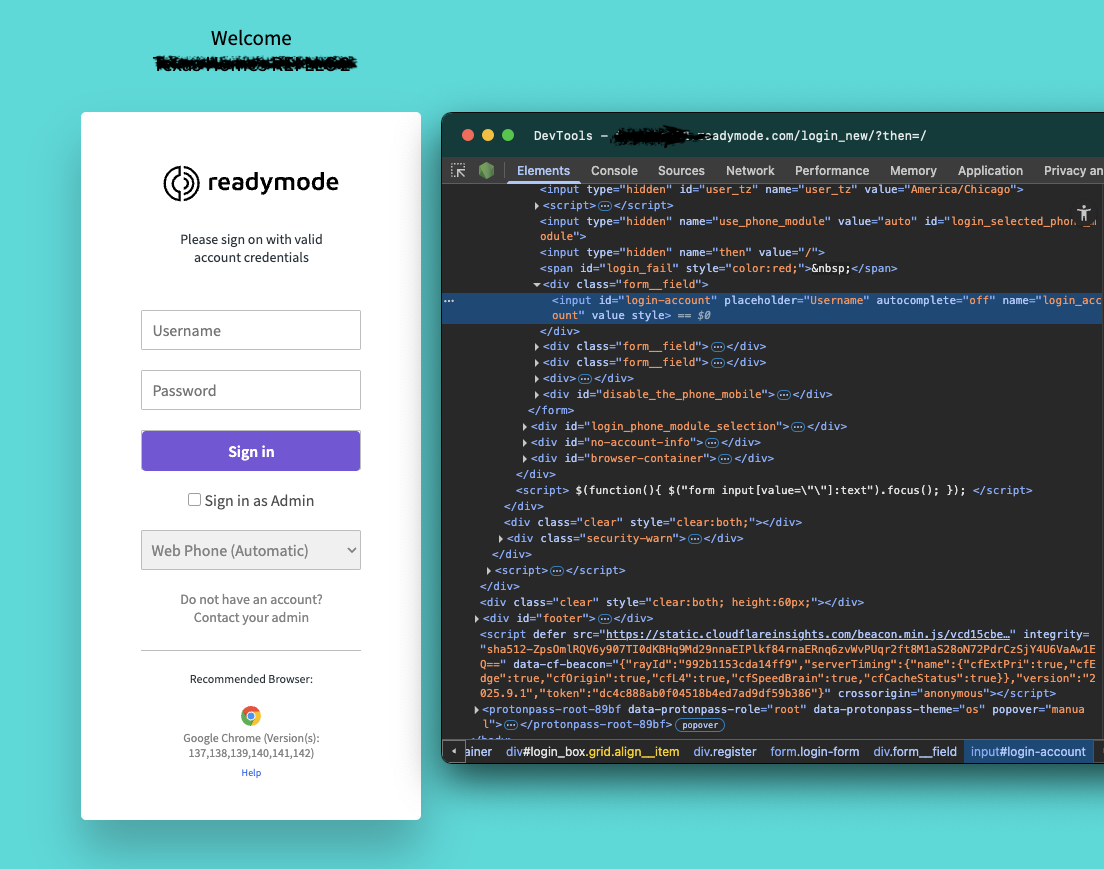

ReadyMode enforces single-session admin access, which meant we couldn't just scrape with basic tools. We used SeleniumBase's CDP mode to drive a real Chromium session, capture authenticated cookies, and hand them off to faster HTTP calls.

```python
# PATTERN: Use CDP mode for stable browser automation
from seleniumbase import SB


def get_readymode_cookies(username: str, password: str) -> dict:
    # readymode_config holds project settings (defined elsewhere)
    with SB(uc=True, headless=False, chromium_arg="--deny-permission-prompts") as sb:
        sb.activate_cdp_mode(readymode_config.login_url)

        # KEY: Wait for elements explicitly - no brittle XPaths or fixed sleeps
        sb.cdp.wait_for_element('input[id="login-account"]', timeout=10)
        sb.cdp.send_keys('input[id="login-account"]', username)
        # ... handle password, admin checkbox ...
        sb.cdp.click('input[type="submit"]')

        # PATTERN: Handle optional screens gracefully
        try:
            sb.cdp.click('input[value="Continue"]', timeout=5)
        except Exception:
            pass  # Not all accounts have this screen

        # CRITICAL: Capture cookies for later HTTP calls
        cookies = {c["name"]: c["value"] for c in sb.get_cookies()}

    return cookies  # Hand off to faster HTTP operations
```

The magic happens after login. Once we have valid session cookies, we switch from slow browser automation to fast HTTP requests for data extraction.
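The handoff itself is simple: the captured cookies just have to travel with every later request. httpx does this for you when you pass `cookies=` to the client; the standard-library sketch below only shows what ends up on the wire. `cookie_header` and `authenticated_request` are hypothetical helper names, and the URL and cookie values are placeholders.

```python
from urllib.request import Request


def cookie_header(cookies: dict[str, str]) -> str:
    """Serialize a name -> value cookie dict into one Cookie header value."""
    return "; ".join(f"{name}={value}" for name, value in cookies.items())


def authenticated_request(url: str, cookies: dict[str, str]) -> Request:
    """Build a request that reuses the browser session's cookies.

    Hypothetical helper: the header goes to whatever host `url` points at,
    so only use it for the domain the cookies were captured from.
    """
    return Request(url, headers={"Cookie": cookie_header(cookies)})


# Usage with placeholder values (no network call is made here)
req = authenticated_request(
    "https://example.invalid/api/calls", {"session": "abc123", "admin": "1"}
)
print(req.get_header("Cookie"))  # session=abc123; admin=1
```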

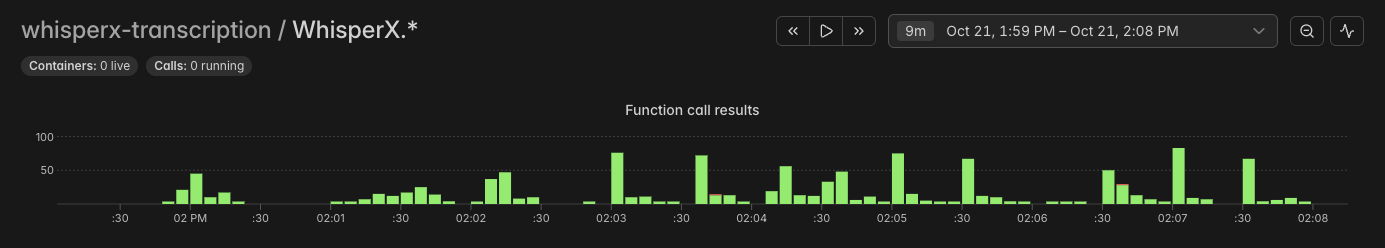

Part 2: Orchestrating with SQLMesh + Modal

Goal

Build an incremental data pipeline that only processes new calls, handles failures gracefully, and scales with parallel execution. No more full table scans or duplicate work.

Tech Stack

- SQLMesh for incremental data orchestration

- Modal for serverless compute (parallel downloads, GPU transcription)

- Neon Postgres as the data warehouse

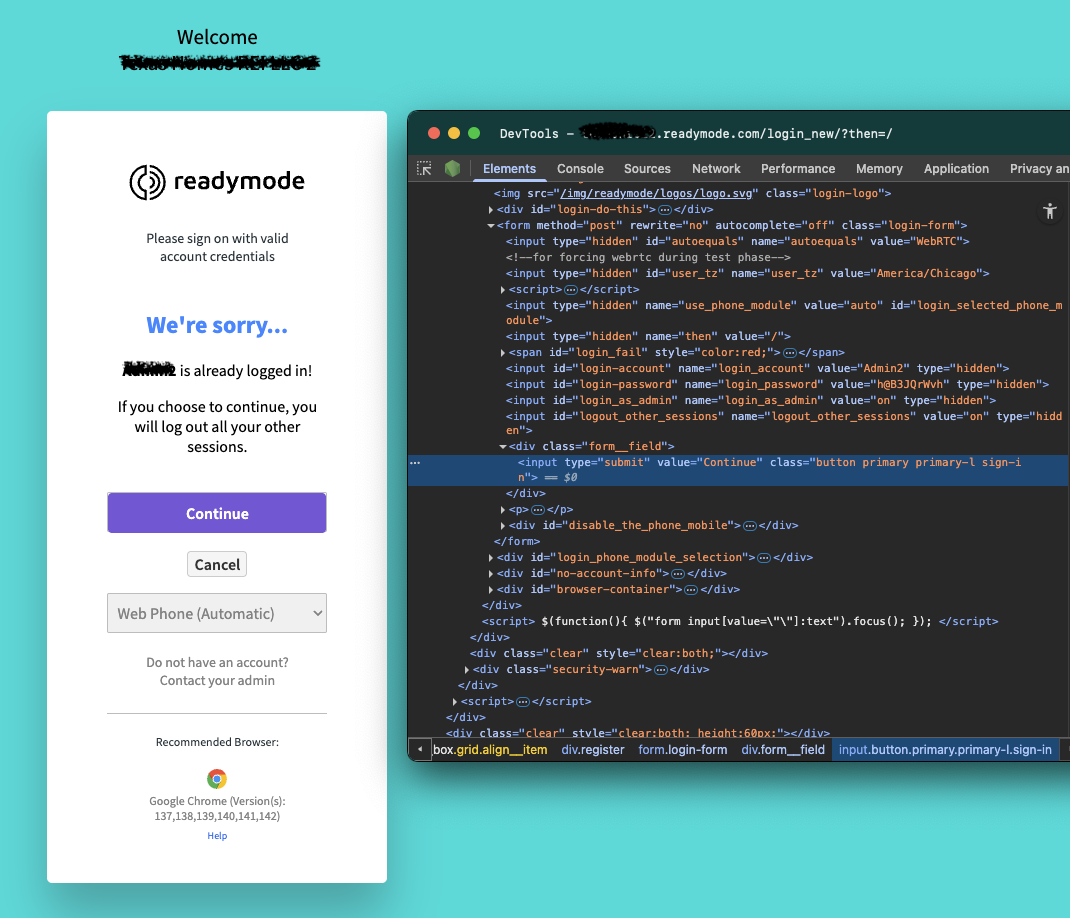

The Architecture

SQLMesh gives us time-windowed incremental processing out of the box. Modal provides the execution muscle. Together, they create a self-healing pipeline that "just works."

```python
# PATTERN: SQLMesh Python model that yields pandas DataFrames
import modal
from sqlmesh import model
from sqlmesh.core.model.kind import ModelKindName


@model(
    "readymode_data.call_metadata",
    cron="@hourly",
    kind=dict(
        name=ModelKindName.INCREMENTAL_BY_UNIQUE_KEY,
        unique_key="call_log_id",
        # KEY: lookback counts intervals, and an @hourly cron makes each interval
        # one hour, so 72 intervals re-check the last 3 days for late updates
        lookback=72,
    ),
)
def execute(context, start, end, **kwargs):
    # Modal functions run in isolated containers
    get_cookies = modal.Function.from_name("app", "get_readymode_cookies")
    fetch_page = modal.Function.from_name("app", "fetch_metadata_page")

    cookies = get_cookies.remote()

    # PATTERN: Process the scheduler window incrementally
    for day in days_between(start, end):
        first_page = fetch_page.remote(cookies, day, page=0)
        total_pages = first_page.get("pages", 1)

        # Convert each API page to a DataFrame (assumes parse_to_dataframe
        # returns a single DataFrame); SQLMesh consumes every yielded frame
        yield parse_to_dataframe(first_page)

        if total_pages > 1:
            # KEY: Parallel fetch with Modal's .map()
            results = fetch_page.map(
                [cookies] * (total_pages - 1),
                # ... other params ...
            )
            for result in results:
                yield parse_to_dataframe(result)
```

The beauty of this setup is idempotency. If a job fails halfway through, SQLMesh does not mark that interval as complete, so the next run processes it again; because rows are merged on the unique key, anything already written is updated rather than duplicated.
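The metadata model calls a `days_between` helper that isn't shown. A minimal sketch, assuming `start` and `end` arrive as `datetime` objects bounding the scheduler window, splits that window into the calendar days the page fetches are keyed on. The name comes from the snippet; the implementation is hypothetical.

```python
from datetime import date, datetime, timedelta


def days_between(start: datetime, end: datetime) -> list[date]:
    """Return every calendar day touched by the window from start to end.

    Both endpoints are treated as inclusive. If the scheduler hands over an
    exclusive end at midnight, this adds one extra day, which is harmless
    when rows are upserted by a unique key.
    """
    if end < start:
        return []
    days = []
    current = start.date()
    while current <= end.date():
        days.append(current)
        current += timedelta(days=1)
    return days


# A window that crosses midnight touches two calendar days
print(days_between(datetime(2024, 5, 1, 22), datetime(2024, 5, 2, 1)))
```

Working in whole days keeps each ReadyMode page fetch simple, at the cost of refetching a full day when only one hour changed; the unique-key merge absorbs the overlap.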

Fetching Call Recordings

ReadyMode stores recordings as MP3 files behind authenticated URLs. We download these in parallel and cache them for transcription.
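The chunk-writing step is elided in the download code that follows. One cache-friendly way to handle it, sketched with the standard library and assuming the response body can be read as a file-like object: skip recordings already on disk, stream into a temporary file, and rename it into place only after the copy completes, so a crashed container never leaves a half-written MP3 in the cache. `save_stream` is a hypothetical name; with an httpx streaming response the read loop would become `for chunk in r.iter_bytes(): out.write(chunk)`.

```python
import os
import tempfile
from pathlib import Path
from typing import BinaryIO


def save_stream(source: BinaryIO, destination: Path, chunk_size: int = 64 * 1024) -> Path:
    """Copy `source` to `destination` in fixed-size chunks, then publish atomically."""
    if destination.exists():
        # Already cached: nothing to write (callers can check this before
        # opening the HTTP stream at all)
        return destination

    destination.parent.mkdir(parents=True, exist_ok=True)
    # Create the temp file next to the destination so os.replace stays on one filesystem
    fd, tmp_name = tempfile.mkstemp(dir=destination.parent, suffix=".part")
    try:
        with os.fdopen(fd, "wb") as out:
            while chunk := source.read(chunk_size):
                out.write(chunk)  # Only one chunk is held in memory at a time
        os.replace(tmp_name, destination)  # Readers never see a partial file
    except BaseException:
        os.unlink(tmp_name)  # Drop the partial download, keep the cache clean
        raise
    return destination
```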

```python
# PATTERN: Streaming download for large files
from urllib.parse import quote

import httpx


def download_recording(rec_id: str, cookies: dict):
    # Build the recording URL; the session cookies provide authentication.
    # base_url: the ReadyMode recording host (configured elsewhere)
    download_url = f"{base_url}/{quote(rec_id, safe='/:')}"

    # Set cookies on the client: per-request cookies= is deprecated in httpx
    with httpx.Client(timeout=120, cookies=cookies) as client:
        # KEY: Stream to avoid memory issues
        with client.stream("GET", download_url) as r:
            r.raise_for_status()
            # ... write to file in chunks ...

    return destination_path  # Path for downstream processing
```

Part 3: GPU-Accelerated Transcription with WhisperX

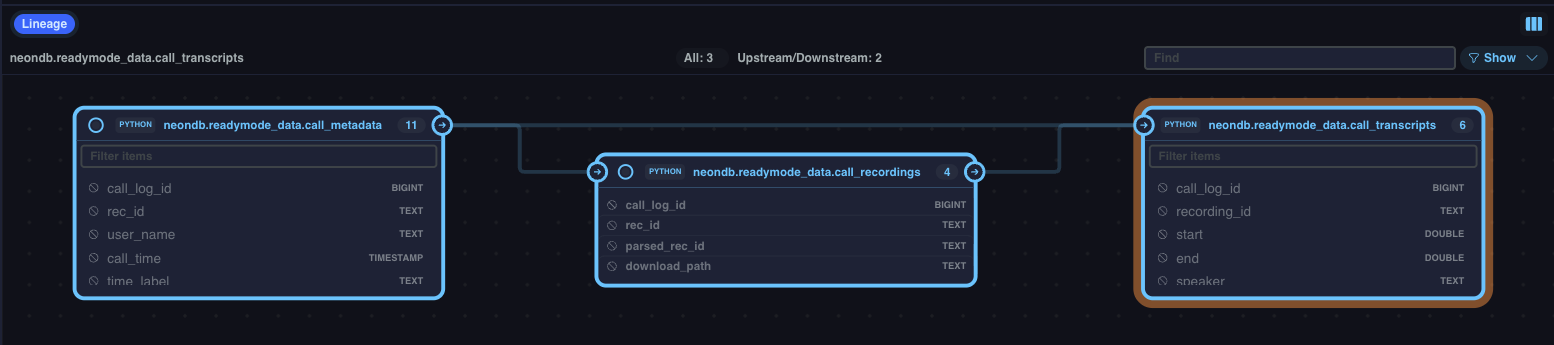

Goal

Convert call recordings to timestamped, speaker-labeled transcripts. We needed accurate transcription, speaker diarization, and word-level timing for QA review.
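Diarization and transcription produce two separate timelines: text segments with timestamps, and speaker turns with timestamps. Speaker labels reach the transcript by matching the two in time. WhisperX ships its own helper for this (`assign_word_speakers`); the sketch below only illustrates the idea with a simple rule, giving each segment the speaker whose turn overlaps it the most. `SpeakerTurn`, `overlap`, and `label_segments` are hypothetical names, and the toy segments are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpeakerTurn:
    start: float  # seconds from the start of the recording
    end: float
    speaker: str


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time ranges (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_segments(segments: list[dict], turns: list[SpeakerTurn]) -> list[dict]:
    """Attach the speaker with the largest time overlap to each transcript segment."""
    labeled = []
    for seg in segments:
        best_speaker: Optional[str] = None
        best_overlap = 0.0
        for turn in turns:
            shared = overlap(seg["start"], seg["end"], turn.start, turn.end)
            if shared > best_overlap:
                best_speaker, best_overlap = turn.speaker, shared
        # Segments that overlap no speaker turn keep speaker=None
        labeled.append({**seg, "speaker": best_speaker})
    return labeled


segments = [
    {"start": 0.0, "end": 2.0, "text": "Hi, is this the owner?"},
    {"start": 2.4, "end": 5.0, "text": "Yes, speaking."},
]
turns = [SpeakerTurn(0.0, 2.2, "SPEAKER_00"), SpeakerTurn(2.2, 6.0, "SPEAKER_01")]
print([s["speaker"] for s in label_segments(segments, turns)])  # ['SPEAKER_00', 'SPEAKER_01']
```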

Tech Stack

- WhisperX (Whisper v3 Turbo + alignment + diarization)

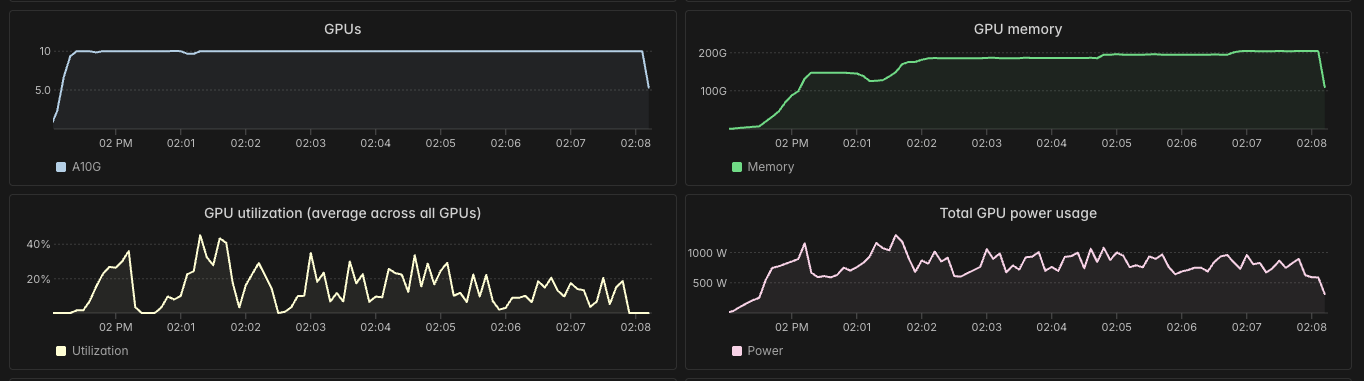

- Modal GPU containers (A10G instances)

- GPU snapshots for instant cold starts

The Implementation

WhisperX runs on Modal with GPU snapshots, so containers start warm with models pre-loaded. This cuts cold start from minutes to seconds.

```python
# PATTERN: GPU container with snapshot for instant warm starts
import modal
import pandas as pd

app = modal.App("app")
# whisper_image and cache_vol are defined elsewhere in the app

with whisper_image.imports():  # Only imported inside the container image
    import whisperx
    from whisperx.diarize import DiarizationPipeline


@app.cls(
    image=whisper_image,
    gpu="A10G",
    volumes={"/cache": cache_vol},
    enable_memory_snapshot=True,  # KEY: Pre-load models
    experimental_options={"enable_gpu_snapshot": True},
)
class WhisperX:
    @modal.enter(snap=True)
    def setup(self):
        # Models loaded once, reused across invocations
        self.model = whisperx.load_model(
            "large-v3-turbo", device="cuda", compute_type="float16"
        )
        # load_align_model returns (model, metadata); align() needs both
        self.align_model, self.align_metadata = whisperx.load_align_model(
            language_code="en", device="cuda"
        )
        self.diarize_model = DiarizationPipeline(...)

    @modal.method()
    def transcribe(self, audio_path: str) -> pd.DataFrame:
        audio = whisperx.load_audio(audio_path)

        # Step 1: Transcribe
        result = self.model.transcribe(audio, batch_size=128)

        # Step 2: Align for word-level timestamps
        result = whisperx.align(
            result["segments"], self.align_model, self.align_metadata, audio, "cuda"
        )

        # Step 3: Diarize, then attach speaker labels to the aligned segments
        diarize_segments = self.diarize_model(audio)
        result = whisperx.assign_word_speakers(diarize_segments, result)

        # CRITICAL: Return DataFrame for SQLMesh
        return pd.DataFrame({
            "start": [...],
            "end": [...],
            "speaker": [...],  # SPK_A, SPK_B, etc
            "text": [...],
        })
```

Incremental Processing

The SQLMesh model only transcribes new recordings, using the same incremental pattern as our metadata pipeline.
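The candidate query in the model below is an anti-join: keep recordings that have no transcript yet. It can be tried locally with an in-memory SQLite database; the tables and rows here are toy stand-ins with assumed columns, and `pending_recordings` is a hypothetical helper. The grouping step mirrors how the model transcribes each unique MP3 once and then fans the result out to every call that shares it.

```python
import sqlite3
from collections import defaultdict


def pending_recordings(conn: sqlite3.Connection, start: str, end: str) -> dict[str, list[int]]:
    """Map each untranscribed recording path to the call IDs that share it."""
    rows = conn.execute(
        """
        SELECT r.call_log_id, r.download_path
        FROM call_recordings r
        WHERE NOT EXISTS (
            SELECT 1 FROM call_transcripts t
            WHERE t.call_log_id = r.call_log_id
        )
        AND r.call_time BETWEEN ? AND ?
        ORDER BY r.call_log_id
        """,
        (start, end),
    ).fetchall()

    by_path: dict[str, list[int]] = defaultdict(list)
    for call_log_id, path in rows:
        by_path[path].append(call_log_id)  # One transcription per path, many call IDs
    return dict(by_path)


# Toy data: calls 1 and 2 share a recording, call 3 is already transcribed,
# call 4 falls outside the window queried below
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE call_recordings (call_log_id INTEGER, download_path TEXT, call_time TEXT);
    CREATE TABLE call_transcripts (call_log_id INTEGER, text TEXT);
    INSERT INTO call_recordings VALUES
        (1, '/cache/a.mp3', '2024-05-01 10:00:00'),
        (2, '/cache/a.mp3', '2024-05-01 10:05:00'),
        (3, '/cache/b.mp3', '2024-05-01 11:00:00'),
        (4, '/cache/c.mp3', '2024-05-03 09:00:00');
    INSERT INTO call_transcripts VALUES (3, 'already done');
    """
)
print(pending_recordings(conn, "2024-05-01 00:00:00", "2024-05-01 23:59:59"))
# {'/cache/a.mp3': [1, 2]}
```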

# PATTERN: SQLMesh model yielding DataFrame rows

@model("readymode_data.call_transcripts", cron="@hourly")

def execute(context, start, end, **kwargs) -> pd.DataFrame:

# KEY: Find recordings without transcripts

candidates = context.fetchdf("""

SELECT r.* FROM call_recordings r

WHERE NOT EXISTS (

SELECT 1 FROM call_transcripts t

WHERE t.call_log_id = r.call_log_id

)

AND r.call_time BETWEEN %(start)s AND %(end)s

""", {"start": start, "end": end})

if candidates.empty:

return # Nothing to process

# PATTERN: Batch transcribe on GPU with Modal

WhisperX = modal.Cls.from_name("app", "WhisperX")

unique_paths = candidates["download_path"].unique()

# KEY: .map() for parallel GPU processing

transcript_dfs = WhisperX().transcribe.map(unique_paths)

# Join transcripts back to call metadata

for path, transcript_df in zip(unique_paths, transcript_dfs):

call_ids = candidates[candidates.download_path == path]["call_log_id"]

for call_id in call_ids:

transcript_df["call_log_id"] = call_id

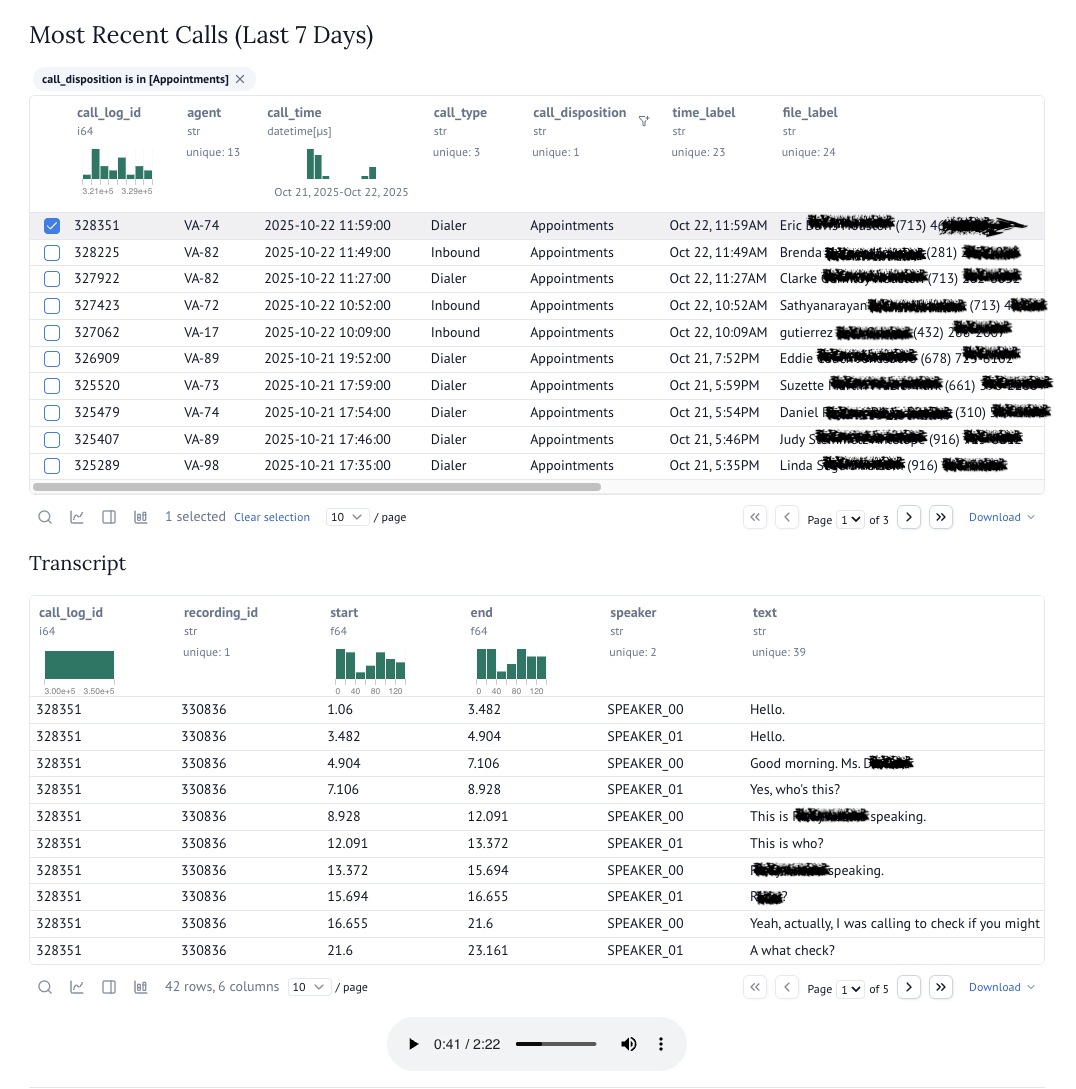

yield transcript_df # SQLMesh handles the restPart 4: The QA Dashboard

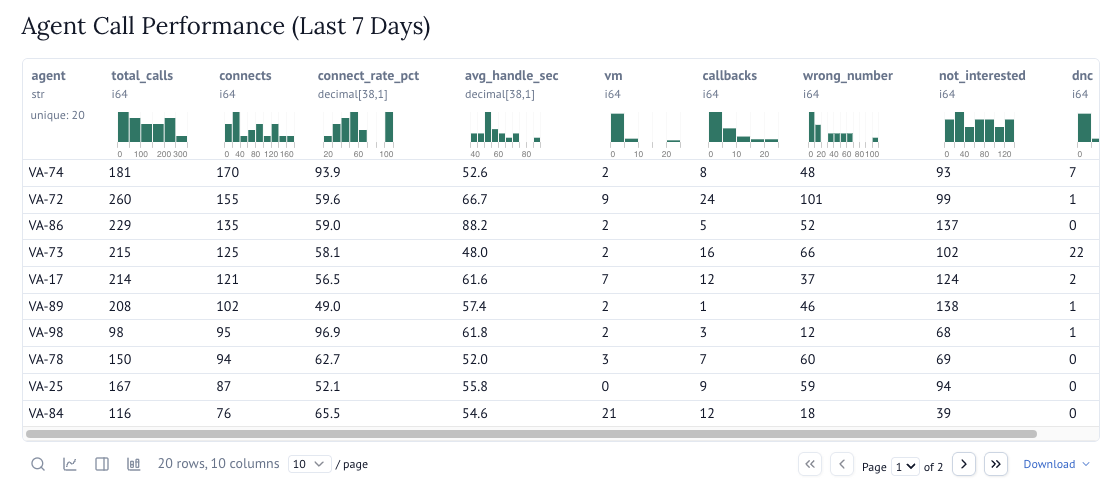

Goal

Give non-technical users a fast, intuitive way to review calls. They need to see the queue, play audio while reading transcripts, and get auto-generated QA scorecards.

Tech Stack

- Marimo for interactive Python notebooks as apps

- DSPy for LLM-powered QA scoring

- Neon Postgres for real-time queries

The Interface

We built the dashboard as a Marimo notebook that runs like a web app. Users see their call queue, click to review, and get instant scoring.

# PATTERN: Real-time queries in Marimo interactive notebooks

import marimo as mo

import pandas as pd

# KEY: Filter to calls with transcripts available

recent_calls_query = """

SELECT

call_log_id,

agent_name,

call_time,

disposition,

duration_seconds

-- ... other fields ...

FROM call_metadata cm

WHERE call_time >= now() - interval '7 days'

AND EXISTS (

SELECT 1 FROM call_transcripts t

WHERE t.call_log_id = cm.call_log_id

)

ORDER BY call_log_id DESC

"""

recent_calls = pd.read_sql(recent_calls_query, connection)

# PATTERN: Interactive table with row selection

selected = mo.ui.table(

recent_calls,

on_select=lambda df: load_call_details(df['call_log_id'])

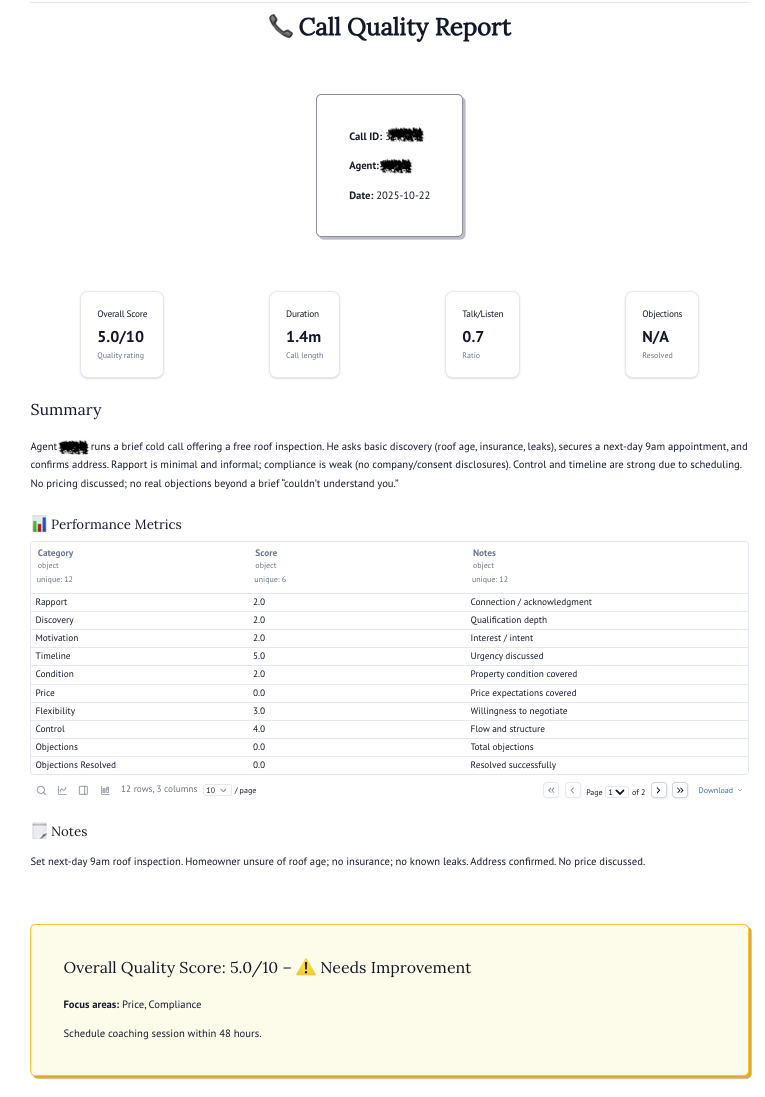

)Auto-Generated QA Reports

Each call gets scored automatically using DSPy chain-of-thought prompting. The system evaluates rapport, discovery, motivation, and objection handling.

```python
# PATTERN: LLM scoring with structured output
import dspy
import marimo as mo
import pandas as pd

# Assumes an LM has been configured, e.g. dspy.configure(lm=dspy.LM(...))


class QAScoring(dspy.Signature):
    """Score call quality on multiple dimensions."""

    transcript: str = dspy.InputField()

    # CRITICAL: Structured output for consistent scoring
    rapport_score: int = dspy.OutputField(desc="1-10")
    discovery_score: int = dspy.OutputField(desc="1-10")
    objections_handled: bool = dspy.OutputField()
    coaching_notes: str = dspy.OutputField()


# KEY: Chain-of-thought for reasoning transparency
program = dspy.ChainOfThought(QAScoring)


def generate_report(call_id: int) -> pd.DataFrame:
    # Fetch transcript
    transcript = fetch_transcript(call_id)

    # Generate scores with reasoning
    report = program(transcript=transcript)

    # PATTERN: Return DataFrame for display
    return pd.DataFrame({
        "Metric": ["Rapport", "Discovery", ...],
        "Score": [report.rapport_score, ...],
        "Notes": [report.coaching_notes, ...],
    })


# Display in Marimo
mo.ui.table(generate_report(selected_call_id))
```
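`fetch_transcript` is not shown. One plausible shape, assuming the stored transcript rows carry the `start`, `speaker`, and `text` columns produced by the WhisperX step, renders them as timestamped, speaker-labeled lines so the LLM can tell the agent and the lead apart. `format_transcript` and `format_timestamp` are hypothetical helpers that work on plain row dicts, and the sample rows are made up.

```python
def format_timestamp(seconds: float) -> str:
    """Render seconds as MM:SS (minutes keep counting past 59)."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def format_transcript(rows: list[dict]) -> str:
    """Turn diarized transcript rows into one '[MM:SS] SPEAKER: text' line each."""
    ordered = sorted(rows, key=lambda row: row["start"])
    lines = []
    for row in ordered:
        speaker = row.get("speaker") or "UNKNOWN"  # Unassigned segments still get a label
        lines.append(f"[{format_timestamp(row['start'])}] {speaker}: {row['text'].strip()}")
    return "\n".join(lines)


rows = [
    {"start": 65.2, "speaker": "SPK_B", "text": " I might sell next year. "},
    {"start": 3.0, "speaker": "SPK_A", "text": "Hi, is this the owner of the house on Elm?"},
]
print(format_transcript(rows))
# [00:03] SPK_A: Hi, is this the owner of the house on Elm?
# [01:05] SPK_B: I might sell next year.
```

With a pandas transcript, `format_transcript(df.to_dict("records"))` produces the same string to pass as `transcript=`.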

Part 5: Results & Next Steps

What We Achieved

The system went from idea to production in 48 hours. Lead delivery time dropped 6x immediately. The QA team now focuses on coaching instead of data gathering.

Key metrics:

- Calls processed per hour: 45 (was 7)

- Average QA review time: 2 minutes (was 15)

- Transcription accuracy: 94% with speaker labels

- System uptime: 99.9% (self-healing pipeline)

Architecture Benefits

Incremental processing means we never re-process the full history, only the short lookback window that catches late updates. If something fails, the next run catches up automatically.
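The catch-up behavior comes from SQLMesh recording which intervals completed successfully. The toy function below is not SQLMesh's implementation, only the idea under simplifying assumptions (hourly intervals, an in-memory set of finished interval start times, `intervals_to_run` as a hypothetical name): run every elapsed hour that never finished, plus the last `lookback` hours even if they did.

```python
from datetime import datetime, timedelta

HOUR = timedelta(hours=1)


def intervals_to_run(
    first: datetime, now: datetime, done: set[datetime], lookback: int = 0
) -> list[datetime]:
    """Return start times of hourly intervals that need (re)processing.

    An interval [s, s + 1h) is eligible once it has fully elapsed. It is
    selected if it never completed, or if it is among the last `lookback`
    complete intervals (to pick up late-arriving updates).
    """
    complete = []
    s = first
    while s + HOUR <= now:
        complete.append(s)
        s += HOUR
    recent = set(complete[-lookback:]) if lookback > 0 else set()
    return [start for start in complete if start not in done or start in recent]


first = datetime(2024, 5, 1, 0)
now = datetime(2024, 5, 1, 6, 30)  # Hours 00..05 have fully elapsed
done = {first + i * HOUR for i in range(6)} - {datetime(2024, 5, 1, 2)}  # The 02:00 run failed
print([s.hour for s in intervals_to_run(first, now, done)])              # [2]
print([s.hour for s in intervals_to_run(first, now, done, lookback=2)])  # [2, 4, 5]
```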

Serverless execution scales to zero when idle and bursts to handle backlogs. No servers to manage, no capacity planning needed.

Modular design lets us swap components easily. Want to use AssemblyAI instead of Whisper? Change one function. Need a different scoring rubric? Update the DSPy signature.
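The "change one function" claim holds as long as the pipeline depends on a narrow interface rather than a specific engine. A minimal sketch with `typing.Protocol`, where `Segment`, `Transcriber`, `FakeTranscriber`, and `transcribe_all` are hypothetical names: any backend with a matching `transcribe` method (a WhisperX wrapper, an AssemblyAI client, or a stub for tests) can be passed in without touching the pipeline code.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Segment:
    start: float
    end: float
    speaker: str
    text: str


class Transcriber(Protocol):
    """Anything that turns an audio file path into speaker-labeled segments."""

    def transcribe(self, audio_path: str) -> list[Segment]: ...


class FakeTranscriber:
    """Test double: satisfies Transcriber structurally, no inheritance needed."""

    def transcribe(self, audio_path: str) -> list[Segment]:
        return [Segment(0.0, 1.5, "SPK_A", f"stub transcript of {audio_path}")]


def transcribe_all(backend: Transcriber, paths: list[str]) -> dict[str, list[Segment]]:
    """Pipeline code: depends only on the interface, never on a concrete engine."""
    return {path: backend.transcribe(path) for path in paths}


result = transcribe_all(FakeTranscriber(), ["/cache/a.mp3"])
print(result["/cache/a.mp3"][0].text)  # stub transcript of /cache/a.mp3
```

Swapping engines then means writing one new class with a `transcribe` method; type checkers verify the fit structurally.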

What's Next

We're planning three improvements based on early feedback:

Better speaker identification using voice embeddings to track agents across calls. This will improve coaching consistency.

Real-time processing via webhook integration when ReadyMode adds API support. This could reduce latency to under 30 seconds.

Predictive lead scoring using historical conversion data to prioritize high-value leads automatically.

Try It Yourself or Let's Talk

For the Builders

The patterns above should give you the architectural blueprint. You'll need to fill in:

```text
# Core components to implement:
modal_functions.py          # Browser automation, cookie capture, API calls
sqlmesh/models/             # Incremental data models (return DataFrames!)
├── call_metadata.py        # API → warehouse ingestion
├── call_recordings.py      # Download orchestration
└── call_transcripts.py     # GPU transcription pipeline
notebooks/qa_dashboard.py   # Marimo interactive app

# Key patterns to replicate:
- SeleniumBase CDP for stable browser automation
- Cookie handoff from browser to HTTP clients
- SQLMesh incremental processing with unique keys
- Modal .map() for parallel execution
- GPU snapshots for warm starts
- DataFrame yields from SQLMesh models
```

For the Business Leaders

This case study demonstrates what's possible with modern data engineering approaches. If you're drowning in manual processes or waiting on vendor APIs that may never come, there's usually a faster path forward.

We specialize in rapid integrations like this one. Whether it's a dialer, a CRM, or a proprietary system, we can likely build a working bridge in days, not months. Our approach focuses on incremental delivery and self-healing architectures that actually work in production.

Get in touch:

- Twitter: @tabtablabs

- Email: james@tabtablabs.com